Meta’s chief AI scientist Yann LeCun recently published a position paper, “A Path Towards Autonomous Machine Intelligence,” which explores paths to machine intelligence by drawing on how animals and humans learn.

He opens with three questions: “How can machines learn to represent the world, learn to predict, and learn to act largely by observation? How can machine reason and plan in ways that are compatible with gradient-based learning? How can machines learn to represent percepts and action plans in a hierarchical manner, at multiple levels of abstraction, and multiple time scales?”

He discusses learning world models and describes the modules of an architecture he proposes, loosely modeled on how humans and animals observe the world, including the configurator, perception, cost and short-term memory modules, among others. He also proposes how a world model could be designed and trained with self-supervised learning.

In an interview with ZDNet describing the paper, he said, “You know, we’re not to the point where our intelligent machines have as much common sense as a cat. So, why don’t we start there? What is it that allows a cat to apprehend the surrounding world, do pretty smart things, and plan and stuff like that, and dogs even better?”

Human and animal intelligence rests on thought, or some form of thought. It is what we use to relate to the world, to observe it and interact with it. Intelligence, consciousness, experience and the rest are elements of thought, with different properties, expressing different states and extents.

How can a machine acquire something that looks like thought, or comes close to it, in order to mimic what humans do? Cars don’t understand what it means to crash or crumple. This is not because they lack sensors, neural networks, pattern recognition or computer vision, but because they do not carry out what the brain does when it processes fear: sensing, conversion into a new identity, memory and reaction.

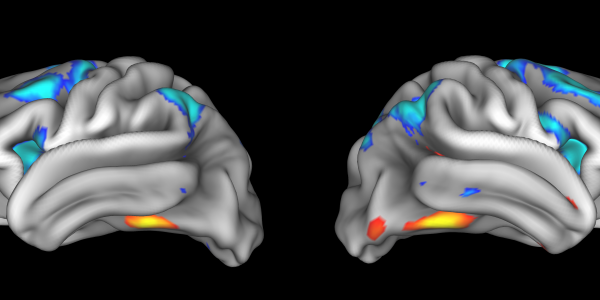

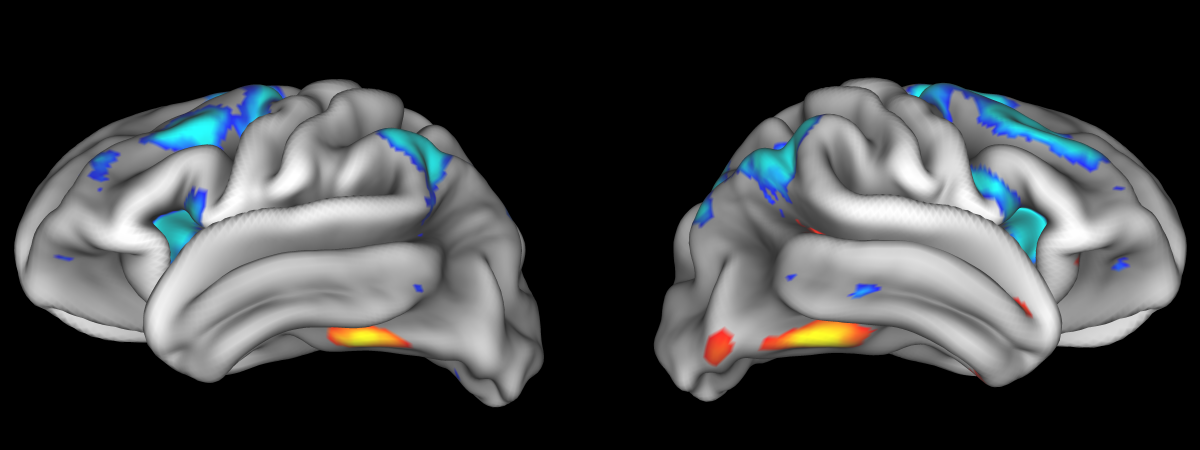

In neuroscience, sensory signals go first to the thalamus (or, for smell, to the olfactory bulb) for processing or integration, before being relayed to the cerebral cortex for interpretation. It is this processing and interpretation that ultimately shapes every experience.

It is theorized that sensory integration or processing converts inputs into a uniform unit or identity, which is thought or a form of thought. This is what the senses become before they are relayed onward.

So whatever is seen, smelled, touched, heard or tasted is no longer what it was; it becomes a new identity, representation, equivalent or uniform unit. It is in this form that it is relayed for interpretation.

Interpretation is postulated to consist of knowing, feeling and reaction. Knowing is memory. Memory stores thought in large and small packages, with small ones relaying in sequence to large stores. Small stores can be prioritized or pre-prioritized. Large stores have a principal spot, where only one package at a time goes to dominate.

It is what happens in memory that decides which feelings follow before a reaction. Fear or panic comes mostly from what is already known. Someone who smells something considered dangerous elsewhere, but does not recognize it, feels nothing, and the same holds for things seen, heard and so on.

Thought, or its form, is what is relayed across these stages, and it is central to intelligence.

This is why AI is weak: not for lack of models, but for lack of thought, and not for lack of working memory, predictive coding or any of several other labels.

AI simply lacks thought as a construct of its neural networks.

How can AI have a form of thought? Or, starting with autonomous vehicles, how can they know fear by having a fluid memory of crumples and crashes, so that as inputs arrive at the sensors they go to the places where risks and consequences are understood, instead of the vehicle outsourcing its fear to humans?

Nvidia, which recently announced its GeForce RTX 4090 gaming GPU and its Jetson Orin Nano system-on-module for robotics development, has an opportunity to stretch into thought and go beyond current approaches to machine intelligence.